Sharing Geolocated Content over the Wave Federation Protocol

Thomas Wrobel (thomas@lostagain.nl)

In my paper "Everything Everywhere", I proposed how an AR network could be developed using existing protocols and standards. I summarised the desirable properties of the system, and focused on IRC networks as a template.

At the end, I suggested that the Wave Federation Protocol (WFP), an XMPP extension originally introduced by Google but now under Apache, could provide a good basis for a protocol.

In this paper I will summarize the principles and steps for using WFP to geolocate data.

Why Geolocate content using Wave?

There are quite a few reasons, but here are the main ones:

1. Wave is a federated, decentralized system. It allows anyone to share content between just a few people *without* all of them needing to be signed up to the same third-party server. Like e-mail, Wave lets many different users communicate from independent servers and still be assured that only the people invited can see the content.

Also, much like OpenID, a wave user will only need to sign in once to access secured content hosted on many WFP servers.

2. Wave is a system that aggregates content into a list of streams of information for the user. The traditional web demands browsing, but on phones or future HMD systems, constantly switching and loading pages becomes impractical. Wave, by comparison, would let clients automatically download nearby data from the Waves the user has subscribed to.

3. Wave allows the realtime moving and updating of content. A 3D object could be moved in one client (if they have permission), and all the other clients subscribed to the wave would see the change happen in realtime.

Again, this happens regardless of the servers the other clients are connected to. As long as the servers are part of the federation, the changes will propagate to all the other servers in realtime.

4. Wave is scalable. Because anyone can set up a wave server and join the federation, the system can grow in proportion to its users. As demands for AR go up, with more advanced HMDs and more constant connections, having a system that doesn't depend on a few central servers is going to be critical to keep the user experience as smooth as possible.

How it fits in with other technologies

WFP is not intended as a replacement for other protocols, nor does it remove the need for standardization of POIs and 3D data. It is intended to address a need in AR for a federated realtime aggregator of content, able to deliver a personal selection of layers from different sources without relying on any single third party.

As such, it's best to think of WFP as akin to e-mail: a different system for exchanging data, neutral to the content of the data itself and still able to link to statically hosted data over HTTP.

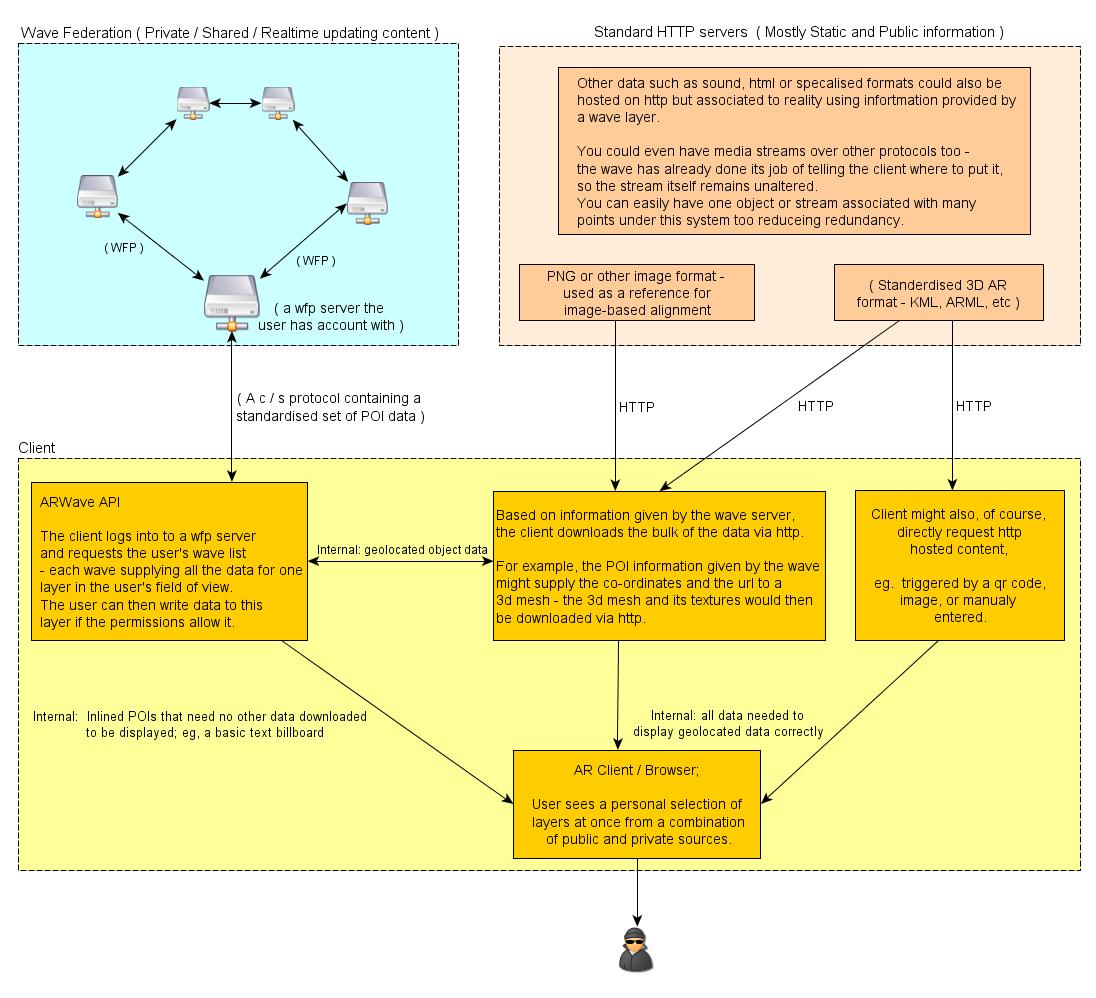

The following diagram shows how a WFP servers and HTTP work together;

It might also help to think of Wave as streaming the small bit of information about where to put something, with standard HTTP supplying the actual content to place.

(The exception to this is small pieces of geolocated text, which could be supplied by the wave server directly for efficiency and simplicity's sake.)

Basic Principles of using Wave for AR

Using WFP for AR data is essentially pretty simple and consists of 3 stages:

A link between a 3D object and a real-world location or image is specified. (Stored as a Blip: a single unit of data created by one or more users.)

A collection of these 3D object links forms a layer. (A Wave: a collection of blips.)

The user's field of view, consisting of many layers. (All the user's subscribed waves.)

1. Each blip forms a "physical hyperlink". A link between real and virtual data.

This link consists of all the data needed to position arbitrary data either in a fixed real-world co-ordinate system, or in a co-ordinate system relative to a trackable image/marker.

For more notes see next section.

The data itself can be as simple as text, inlined into the blip, or remotely linked content such as 3D meshes, sound or other constructs. (this content could be hosted locally or elsewhere, and downloaded via standard http)

The principle of using WFP for AR data exchange is neutral to the type of data you are linking to; standards would have to emerge for precisely what 3D objects, or 3D markup, are renderable by the end clients.

2. A wave is a collection of blips; in AR this would represent a single layer over the user's viewport.

Standard Wave server functions allow the subscribing, creating, and sharing of waves with others. Each Wave can have one or more blips created by one or more people.

Using AR within Wave would give end users the same freedom to create their own content and collaboratively edit it with friends.

Waves can also have bots added to them that are free to manipulate the Blip data. This allows interactive and game-functionality.

No extra protocol work is needed on this level, as this is all native WFP functionality.

3. The end client would render all the user's Waves as layers in their field of view, giving them a personal aggregation of public and private content.
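The three stages above can be sketched as a small data model. This is only an illustration and not part of WFP or any Wave API: the class names `Blip` and `Wave`, the function `field_of_view`, and the sample titles and coordinates are all invented for this example, and the annotation keys are borrowed from the preliminary key list in the next section.

```python
from dataclasses import dataclass, field


@dataclass
class Blip:
    """One 'physical hyperlink': positioning annotations plus optional inline text."""
    annotations: dict[str, str]
    content: str = ""


@dataclass
class Wave:
    """One layer: a titled collection of blips (hypothetical, simplified model)."""
    title: str
    blips: list[Blip] = field(default_factory=list)


def field_of_view(subscribed_waves: list[Wave]) -> list[tuple[str, Blip]]:
    """Flatten every subscribed wave into (layer title, blip) pairs for rendering."""
    return [(wave.title, blip) for wave in subscribed_waves for blip in wave.blips]


# Made-up sample data: one public layer and one private layer.
public = Wave("City guide", [Blip({"Lat": "52.37", "Log": "4.89"}, "Town hall")])
private = Wave("Notes from friends", [Blip({"Lat": "52.36", "Log": "4.90"}, "Meet here")])
view = field_of_view([public, private])
```

Here `view` holds one entry per blip, each tagged with the layer it came from, which is roughly what a client would need in order to let the user toggle individual layers.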

Specific example of key/value pairs that could be stored to position AR Content.

The key/value pairs stored would be the required information to allow a client to position any data at a real world location.

The data itself could be anything; ARWave is a proposal for how to exchange the positioning information; not a specification for what that data being positioned is. Various formats for that would have to be agreed and standardised separately.

Also, this is a preliminary list only shown here as an example. The precise key names and value formats should be agreed upon and standardised. In this way, anyone could create a client and be guaranteed of compatibility.

This list should also not be seen as a complete list of what's needed; other key/value pairs might be needed in future.

These key/value pairs would be stored as 'Annotations' in the blip specification. Annotations basically allow any arbitrary collection of key/value pairs to be stored.

| Key | Value Description |
| --- | --- |
| Log, Lat, Alt | 'double' type numerical values specifying Longitude, Latitude and Altitude to position the data. Alternatively, a single number+offset could be used if this proves more practical. |
| Roll | Degrees rotation around the front to back (z) axis. (Lean left or right.) |
| Pitch | Degrees rotation around the left to right (x) axis. (Tilt up or down, aka elevation.) |
| Yaw | Degrees rotation around the vertical (y) axis. (Relative to magnetic north, aka bearing.) |
| Data Reference Link | Instead of a fixed position specified by the above, data can be positioned by an image specified here. The image orientation determines its position and rotation in space. If both a numerical position and an image link are specified, then the position is considered as an offset to the tracked image. |
| Co-ordinate System | A string specifying the co-ordinate standard used for the above. |
| Data MIMEType | The MIME type of the data linked to. (The data is not necessarily a 3D mesh, but could be sound, text, markup etc.) |
| Data / Data URI | A link to the data resource being positioned. This link could point to a normal static-IP hosted HTTP server, but the data could also be temporarily hosted by the client, addressed by an IP and port number. |
| DataUpdateTimestamp | The last time the linked data was updated. |
| Metadata | Metadata could be a single string field of descriptive tags, or (more usefully) a separate set of key/value pairs with a common starting pattern to help form a more detailed semantic description of the object linked to. |

Many of the k/v pairs on this list would also be optional, depending on the situation. A piece of text content stored inline in the blip's content field would not need an HTTP link to its data, for example.
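As a rough illustration of how a client might interpret such annotations, the sketch below classifies a blip by how it is positioned and checks whether anything needs to be fetched over HTTP. Everything here is an assumption made for the example: the exact key spellings (`DataReferenceLink`, `DataURI`, `CoordinateSystem`, `DataMIMEType`), the `"WGS84"` co-ordinate string, the example.com URLs and the two function names. None of it is a standardised format.

```python
def positioning_mode(annotations: dict[str, str]) -> str:
    """Classify how a blip is positioned, following the rules in the list above."""
    has_position = "Lat" in annotations and "Log" in annotations
    has_image = "DataReferenceLink" in annotations
    if has_image and has_position:
        return "offset-from-image"  # the numbers are an offset to the tracked image
    if has_image:
        return "image"
    if has_position:
        return "fixed"
    raise ValueError("blip has neither a position nor a reference image")


def needs_download(annotations: dict[str, str]) -> bool:
    """Inline text lives in the blip content, so only linked data needs HTTP."""
    return "DataURI" in annotations


# Hypothetical blips: a linked mesh, a piece of inline text, a marker-tracked mesh.
mesh_blip = {
    "Lat": "52.3702", "Log": "4.8952", "Alt": "2.0",
    "Roll": "0", "Pitch": "0", "Yaw": "90",
    "CoordinateSystem": "WGS84",
    "DataMIMEType": "model/vrml",
    "DataURI": "http://example.com/models/statue.wrl",
}
text_blip = {"Lat": "52.3702", "Log": "4.8952", "DataMIMEType": "text/plain"}
marker_blip = {
    "DataReferenceLink": "http://example.com/markers/poster.png",
    "DataMIMEType": "model/vrml",
    "DataURI": "http://example.com/models/statue.wrl",
}
```

All values are kept as strings here purely for simplicity; whether an agreed format would store numbers as text or as typed values is one of the things that would need to be standardised.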

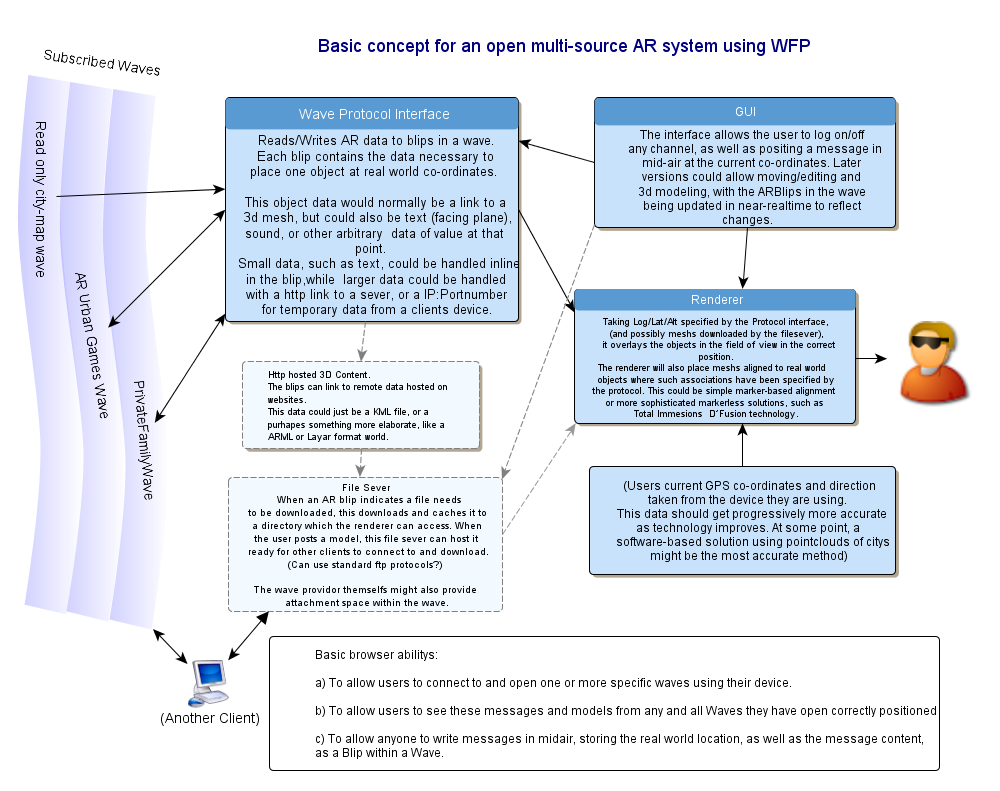

Example schematic of how a WFP AR Client could work;

Essentially, a Wave API would return, and keep updated, a set of AR-formatted blip data from the user's waves. The client would then download any other needed data, and render the results in the user's field of view.
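A minimal sketch of that client step is given below, assuming the blip data has already been delivered by some Wave API (which is not modelled here). The function names, the 500 m radius, the key names `Lat`, `Log` and `DataURI`, and the sample data are all hypothetical. Distance is computed with the standard haversine formula, which is one reasonable choice for "nearby" and not something the proposal prescribes.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def plan_render(waves, user_lat, user_lon, radius_m=500.0):
    """Return (blips to render, URIs to fetch over HTTP) for blips near the user.

    `waves` maps a wave title to a list of annotation dicts (assumed shape).
    """
    to_render, to_fetch = [], []
    for title, blips in waves.items():
        for ann in blips:
            d = distance_m(user_lat, user_lon, float(ann["Lat"]), float(ann["Log"]))
            if d > radius_m:
                continue  # too far away to be worth rendering or downloading
            to_render.append((title, ann))
            if "DataURI" in ann:
                to_fetch.append(ann["DataURI"])
    return to_render, to_fetch


# Made-up subscribed waves: two blips close to the user, one far away.
waves = {
    "City guide": [
        {"Lat": "52.3702", "Log": "4.8952", "DataURI": "http://example.com/statue.wrl"},
        {"Lat": "48.8584", "Log": "2.2945", "DataURI": "http://example.com/tower.wrl"},
    ],
    "Notes": [{"Lat": "52.3703", "Log": "4.8950"}],
}
to_render, to_fetch = plan_render(waves, 52.3702, 4.8952)
```

A real client would rerun this whenever the Wave API reports a change or the user moves, and would handle marker-relative blips separately, since those have no fixed location to filter on.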

Additional Resources

ARWave organisation homepage (including videos of our Android client and a lot more information) -

FAQ: http://lostagain.nl/websiteIndex/projects/Arn/information.html

International AR Standards Meeting-February 17-19 2011